The end of the year, especially in technology, is a time for the pundits to sit down and compile lists of trends and predictions for the upcoming year. Go ahead - search “AI predictions 2025” or “AI trends 2025”, and you will get page upon page of results. With the speed and variety of change that has come to signify AI, especially in the last two years, one wonders if there are enough grains of salt to carry the predictions through.

This take is slightly more nuanced. Imagine artificial intelligence as a teenager navigating the complex social landscape of technological development - full of potential, occasional brilliance, and more than a few awkward moments. The year 2024 was exactly that for AI: a transformative period of growth, challenge, and unexpected revelations. So, instead of vainly trying to predict what may happen in 2025, I decided to look back with a retrospective, a highlights reel of all things AI in 2024 - the year we saw AI come of age. Or did we? Let’s go!

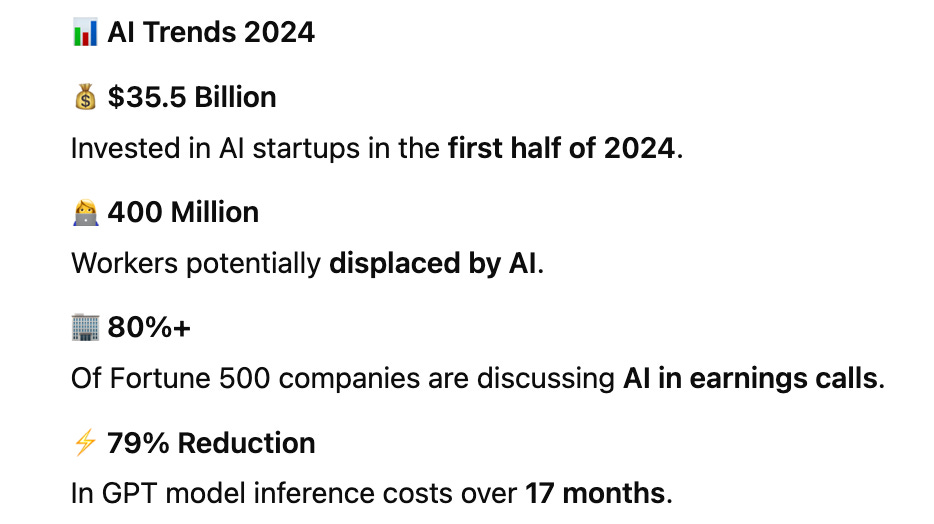

1. The AI investment landscape painted a picture of both wild enthusiasm and careful calculation. In just the first six months of 2024, a jaw-dropping $35.5 billion flooded into AI startups[1] - a nearly twelve-fold increase from the $3 billion invested in 2022. AI deals continued to dominate venture funding in the third quarter, with AI companies raising $18.9 billion in Q3[2]. This wasn't just money; it was a collective bet on a technological future that was simultaneously exciting and uncertain.

Meanwhile, (over)valuation took on new meaning - Nvidia discussed acquiring software startup OctoAI for $165 million[3]. To put that in perspective, Octo had been well funded ($130M at last count) and valued at $900M. Octo caved in (literally days after the above news came) and agreed to be acquired for $250M![4]

2. The AI narrative saw tech leaders emerge as the most eloquent champions[5] of this complex journey. Google's Sundar Pichai articulated a philosophy that became the year's most quoted perspective on technological investment: "The risk of underinvesting is dramatically greater than the risk of overinvesting."

Meta’s Mark Zuckerberg chimed in - "I'd rather risk building capacity before it is needed, rather than too late, given the long lead times for spinning up new infrastructure."

Amazon CEO Andy Jassy piped in with “I think that the reality right now is that while we're investing a significant amount in the AI space and in infrastructure, we would like to have more capacity than we already have today.”

Not to be outdone, Satya Nadella of Microsoft described data center capacity as a “long-term asset, which is land and the data center, which, by the way, we don't even construct things fully. We can even have things which are semi-constructed... We know how to manage our CapEx spend to build out a long-term asset and a lot of the hydration of the kit happens when we have the demand signal.”

It was a bold stance that resonated across Silicon Valley and beyond, acknowledging the transformative potential of AI while remaining pragmatically cautious.

3. The reasoning capabilities of AI models reached near-miraculous levels that would have seemed like science fiction just a few years earlier. Chain-of-thought (CoT) prompting[6] combined with reinforcement learning (RL) to deliver these capabilities. OpenAI's o1-preview models weren't just incremental improvements - they were stellar leaps in machine intelligence[7][8][9]. Consider these mind-bending achievements: ranking in the 89th percentile of competitive programming challenges, placing among the top 500 U.S. students in advanced mathematics examinations, and achieving PhD-level accuracy in scientific reasoning. These weren't just technological improvements; they were fundamental shifts in what we believed machines could accomplish.

As if that were not enough, very recently, we saw OpenAI announce the newer o3 and o3-mini models on the last day of its “12 Days of OpenAI” announcements. These are more than an incremental improvement in AI capability. One could say intelligence hit a wall and decided to go vertical, such is the steepness of improvement reported for the o3 family of models across benchmarks.

Figure 1: OpenAI o3 Breakthrough High Score on ARC-AGI-Pub[10]

Can we call this AGI, or early AGI? I will let you decide. There is already enough noise in the system pointing out that ARC-AGI is a misnomer. Even Chollet acknowledged this in his blog, saying “it’s not an acid test for AGI”. At most, the test is necessary for AGI but not sufficient. Others argued that what was actually done - pretraining on what they believe were hundreds of public examples - was NOT comparable to what humans require to solve similar problems. Meanwhile, AGI cheerleaders countered: ‘So you studied for the exam and did well. What is wrong with that picture?’ While we may never know the exact moment we actually achieve AGI, the debate around it will continue for the foreseeable future.

Elsewhere, equally compelling advances were made in image and video generation, multi-modality, AI search, robotics, and agentic and edge AI technologies. They are beyond the scope of this article. Suffice it to say these developments are being watched keenly, and the hope is to report back on these innovations in 2025.

4. But the path was not without problems; just ask the lawyers - For every technological marvel, there was an equally compelling story of limitation and challenge. The legal landscape began to reckon with AI's real-world implications in ways that were both fascinating and slightly terrifying. The Air Canada tribunal's decision to hold the company liable for a chatbot's incorrect information wasn't just a legal footnote - it was a watershed moment signaling that AI systems would be held to rigorous real-world standards[11].

This was further brought to light by cases like Barrows v. Humana[12] (a class action over AI-generated coverage denials) and Barulich v. Home Depot (challenging AI-powered privacy monitoring)[13]. These weren't just legal challenges - they were critical moments of defining technological boundaries and ethical frameworks while affording legal recourse and protection.

5. Meanwhile the potential economic implications and effect on human capital were nothing short of revolutionary - A McKinsey report[14] predicted a potential displacement of 400 million workers due to AI-related advancements - a statistic that simultaneously represented both incredible technological progress and profound societal challenge. Yet, in a remarkable twist, over 80% of Fortune 500 companies were now not just discussing AI, but leading with it in their strategic conversations.

6. As if on cue, a lot of talk centered on autonomous software development - the potential for AI agents to collaborate across project management, development, design, and testing roles. A survey of Large Language Model-Based Agents for Software Engineering synthesized 106 papers across software engineering tasks and agent frameworks[15]. We are already familiar with copilots for coding. Combining SE tasks (requirements engineering, code generation, static code checking, testing, debugging) with agent frameworks in which LLMs handle planning (breaking down development and debugging tasks), memory (iterative code refinement and debugging), perception (input testing), and action (interacting with external tools) suggests we are on the threshold of fully autonomous, project-level software development, not just code snippets or other meaningful yet smaller tasks.

7. Yet when it came to practice, organizational challenges remained - Some of the top barriers preventing faster Generative AI implementation included technical complexity, data readiness, organizational readiness, talent scarcity, and regulatory uncertainty[16].

Figure 2: Barriers to moving faster with Generative AI[16]

8. The economics of AI itself underwent a remarkable transformation - Andrew Ng highlighted a statistic[17] that would have seemed impossible just months earlier - GPT model token costs plummeted from $36 to $4 per million tokens in just 17 months. That is an overall drop of about 89%, or roughly 79% per year. This wasn't just a price drop; it was a fundamental democratization of technological capability.
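The price figures quoted above can be sanity-checked with a few lines of arithmetic. The sketch below takes the $36 and $4 per-million-token prices and the 17-month span from the text; the function names are illustrative only. It computes both the overall drop and the equivalent compounded drop per year.

```python
def total_reduction(old_price: float, new_price: float) -> float:
    """Fraction by which the price fell over the whole period."""
    return 1 - new_price / old_price


def annualized_reduction(old_price: float, new_price: float, months: float) -> float:
    """Equivalent constant yearly price drop, assuming compounding."""
    yearly_ratio = (new_price / old_price) ** (12 / months)
    return 1 - yearly_ratio


# Prices in USD per million tokens, as quoted in the text.
overall = total_reduction(36.0, 4.0)
per_year = annualized_reduction(36.0, 4.0, months=17)

print(f"overall drop over 17 months: {overall:.1%}")
print(f"annualized drop:             {per_year:.1%}")
```

Under these inputs the overall drop comes out near 89% and the annualized drop near 79%, which suggests the widely quoted 79% figure is best read as a per-year rate.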

The open-source movement emerged as an unexpected hero in this narrative. By separating model training from runtime, smaller players like Anyscale and Fireworks could suddenly compete with tech giants. It was a David versus Goliath moment in technological development, proving that innovation isn't the exclusive domain of billion-dollar corporations.

Meanwhile, companies such as Cerebras, Groq, and SambaNova are quietly revolutionizing compute for deep learning. In the first half of the year[18], Cerebras led with the CS-3, which consumes 23 kW at peak compared with 14.3 kW for NVIDIA’s DGX B200. However, the CS-3 is significantly faster, providing 125 petaflops vs. 36 petaflops for the DGX B200. This translates to a 2.2x improvement in performance per watt, which would more than halve power expenses over the system’s operational lifespan.
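The performance-per-watt comparison follows directly from the four numbers quoted above. Here is a minimal sketch of that arithmetic, assuming the peak-power and petaflops figures as stated; the helper name is made up for illustration, and real-world efficiency would depend on workload and utilization.

```python
def petaflops_per_kw(petaflops: float, peak_kw: float) -> float:
    """Throughput delivered per kilowatt of peak power draw."""
    return petaflops / peak_kw


# Figures as quoted in the text (vendor-reported peak numbers).
cs3 = petaflops_per_kw(petaflops=125, peak_kw=23)
dgx_b200 = petaflops_per_kw(petaflops=36, peak_kw=14.3)

# Relative efficiency, and the share of energy needed for the same work.
efficiency_ratio = cs3 / dgx_b200
relative_energy = 1 / efficiency_ratio

print(f"CS-3:     {cs3:.2f} petaflops per kW")
print(f"DGX B200: {dgx_b200:.2f} petaflops per kW")
print(f"ratio:    {efficiency_ratio:.1f}x")
print(f"energy for equal work: {relative_energy:.0%} of the DGX B200")
```

The ratio rounds to 2.2x, and the energy needed for equal work lands below half, consistent with the "more than halved" claim under these assumptions.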

Groq, meanwhile, had raised $640M[19] by mid-year to meet the demand for fast inference using its proprietary LPUs. Groq has quickly grown to over 360,000 developers building on GroqCloud™, creating AI applications on openly available models such as Llama 3.1 from Meta, Whisper Large V3 from OpenAI, Gemma from Google, and Mixtral from Mistral.

Not to be outdone, SambaNova, which came out of Stanford University, positions itself as efficient computing at scale[20] that does not run into the power and energy limits of NVIDIA's GPUs. SambaNova’s CEO Rodrigo Liang proclaimed that, compared to NVIDIA, they are able to drive 10X the performance at a tenth of the power consumption.

9. Despite these advancements, energy consumption related to AI grew and is projected to keep growing as if there is no tomorrow - Headlines such as ‘AI poised to drive 160% increase in power demand’[21] and ‘AI already uses as much energy as a small country’[22] became commonplace. Goldman Sachs estimates that AI will increase data center power consumption by 200 terawatt-hours (TWh) per year between 2023 and 2030, and back-of-the-napkin calculations[23] indicate one query to ChatGPT uses approximately as much electricity as could light one light bulb for about 20 minutes.

10. Elsewhere, open-source models continued to expand their footprint while delivering comparable or better technologies - Users gravitated towards open source for a variety of AI needs[24], including models for NLU tasks, vision, and multi-modal capabilities. Clear winners by likes and downloads in this category included Meta (Llama family of models), Google (Gemma), Microsoft (Phi), Cohere (Cohere For AI), xAI (Grok), Stability (Stable Diffusion) and others.

Capping it all was the year-end announcement of ModernBERT[25] - a family of state-of-the-art encoder-only models that improve on older-generation encoders across the board, with an 8,192-token sequence length, better downstream performance, and much faster processing. ModernBERT is available as a slot-in replacement for any BERT-like model, in both 139M-parameter and 395M-parameter sizes. Unlike generative models, which are mathematically “not allowed” to “peek” at later tokens (they can only ever look *backwards*), encoder-only models like BERT are trained so each token can look forwards *and* backwards, supposedly making them more accurate.
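The "peeking" distinction is easiest to see as an attention mask. The sketch below is a deliberately simplified illustration, not ModernBERT's actual implementation: the function names and the four-token example sentence are made up, and a 1 means the row token is allowed to attend to the column token.

```python
def causal_mask(n: int) -> list[list[int]]:
    """Decoder-style mask: token i may attend only to tokens 0..i."""
    return [[1 if j <= i else 0 for j in range(n)] for i in range(n)]


def bidirectional_mask(n: int) -> list[list[int]]:
    """Encoder-style mask: every token may attend to every other token."""
    return [[1] * n for _ in range(n)]


def show(title: str, mask: list[list[int]], tokens: list[str]) -> None:
    """Print the mask with one row per query token."""
    print(title)
    for token, row in zip(tokens, mask):
        print(f"  {token:>6}: {row}")


# Hypothetical example sentence, split into four tokens.
tokens = ["the", "cat", "sat", "down"]
show("causal (generative decoder):", causal_mask(len(tokens)), tokens)
show("bidirectional (encoder-only):", bidirectional_mask(len(tokens)), tokens)
```

In the causal mask the upper triangle is all zeros, so "cat" never sees "sat" or "down"; in the bidirectional mask every entry is 1, which is what lets an encoder use context from both sides of a token.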

11. Meanwhile, ‘Big Brother’ stepped in time and again to rein in AI via policy. The US state-by-state legislation snapshot created by BCLP[26,27] is a great place to see where AI policy stands today across each of the contiguous states. A similar trend ensued in the EU and UK[28], resulting in multiple pieces of (proposed) legislation including the EU AI Act, the EU AI Liability Directive and the EU AI White Paper.

Meanwhile, the White House got into the act with its own executive order[29] “to ensure that America leads the way in seizing the promise and managing the risks of artificial intelligence (AI). The Executive Order directed sweeping actions to manage AI’s safety and security risks, protect Americans’ privacy, advance equity and civil rights, stand up for consumers and workers, promote innovation and competition, advance American leadership around the world, and more”.

12. The Gartner Hype Cycle captured the overall journey perfectly - It appears we may have begun our steep descent from the Peak of Inflated Expectations into the Trough of Disillusionment. The creator of the Hype Cycle seems to agree, telling Yahoo Finance[30] recently “[Generative AI] is not yielding benefits that are in tune with the hype in the market, or in tune even with what customers were expecting out of this.”

Figure 3: (Sample) Gartner Hype Cycle Framework

But this is not a story of failure; it is a story of maturation!

13. On that note, we had some refreshing and honest assessments of the AI landscape - On slow product realization and monetization, Zuckerberg said - "I don't think anyone should be surprised that monetization will take years."

And as Airbnb's Brian Chesky noted[31], “I think one of the things we've learned over the last, say, 18 months is that it's going to take a lot longer than people think for applications to change. What we need to do is we need to actually develop AI applications that are native to the model. No one has done this yet. It's going to take a number of years to develop this. And so it won't be in the next year that this will happen.”

It was a moment of rare corporate humility, acknowledging that technological potential doesn't automatically translate to immediate financial return.

Translation - Buckle up. We just started riding this wave!

Epilogue - By year's end, even with o3 and o3-mini in tow, AI wasn't the earth-shattering revolution some had predicted, at least from a production and ROI perspective (benchmarks aside). Nor was it the dystopian threat that others feared. It was something far more interesting - a technology finding its complex, nuanced place in our world, accelerating at a pace that was becoming obvious only after the fact.

In the grand narrative of technological evolution, 2024 will likely be remembered as the year AI truly began to grow up - still awkward, still learning, but undeniably more sophisticated, more integrated, and more promising than ever before. It wasn't a revolution; it was a remarkable, messy, utterly human process of technological becoming. The AI of 2024 represented a delicate balance of incredible promise and very real limitations.

~10Manager

References

[1] Here’s the full list of 44 US AI startups that have raised $100M or more in 2024, TechCrunch (link)

[2] Global Funding Slowed In Q3, Even As AI Continued To Lead, Crunchbase (link)

[3] Nvidia Recently Discussed Acquiring Software Startup OctoAI for $165 Million (link)

[4] Chip giant Nvidia acquires OctoAI, a Seattle startup that helps companies run AI models (link)

[5] Sourced from commentary in Form 10-Q of Alphabet, Meta, Amazon, Microsoft

[6] Chain-of-Thought Prompting Elicits Reasoning in Large Language Models, arXiv (link)

[7] In the ARC Prize challenge, both o1 models beat GPT-4o (link)

[8] Cursor’s evaluation of o1 (link)

[9] Cognition’s evaluation of o1’s reasoning capabilities with Devin (link)

[10] OpenAI o3 Breakthrough High Score on ARC-AGI-Pub (link)

[11] Airline held liable for its chatbot giving passenger bad advice - what this means for travellers, BBC (link)

[12] AI Litigation Insights - Barrows et al. v. Humana (link)

[13] Google Sued Over Recording Customer Service Calls to Home Depot, Bloomberg (link)

[14] 22 Top AI Statistics And Trends In 2024, Forbes (link)

[15] Large Language Model-Based Agents for Software Engineering: A Survey, arXiv (link)

[16] AI Survey: Four Themes Emerging, Bain (link)

[17] Andrew Ng on the AI model pricing depreciation (link)

[18] Cerebras CS-3 vs. Nvidia B200: 2024 AI Accelerators Compared (link)

[19] Groq Raises $640M To Meet Soaring Demand for Fast AI Inference (link)

[20] SambaNova - Power & Energy will be the AI bottleneck (link)

[21] AI is poised to drive 160% increase in data center power demand, Goldman Sachs (link)

[22] AI already uses as much energy as a small country. It’s only the beginning, Vox (link)

[23] AI brings soaring emissions for Google and Microsoft, a major contributor to climate change, NPR (link)

[24] Most liked and downloaded open source models from 2022 to today, Hugging Face (link)

[25] Finally a replacement for BERT, Hugging Face (link)

[26] US state-by-state AI legislation snapshot, BCLP Law (link)

[27] Artificial Intelligence 2024 Legislation, NCSL (link)

[28] AI regulation tracker: UK and EU take divergent approaches to AI regulation, BCLP Law (link)

[29] Fact Sheet: Key AI Accomplishments in the Year Since the Biden-Harris Administration’s Landmark Executive Order (link)

[30] The AI trade is losing its luster, Yahoo Finance (link)

[31] Airbnb CEO: A New AI-Powered App Will Take ‘Years’, Skift (link)
